Top Risks of Delegating Decisions in Web3 to AI Agents

The rapid convergence of artificial intelligence and decentralized technologies has created a new frontier in the digital economy. As Web3 ecosystems evolve, developers and investors are increasingly turning to AI agents to automate tasks, manage portfolios, execute trades, and even participate in governance decisions. The promise is compelling: intelligent systems that can analyze massive datasets, respond to market changes in real time, and eliminate human error. However, the growing trend of delegating decision-making authority to autonomous AI systems introduces a complex set of risks that cannot be ignored.

The concept of autonomous agents operating within decentralized finance, smart contract platforms, and DAO governance structures may sound futuristic, but it is already becoming a practical reality. From algorithmic trading bots in DeFi protocols to AI-driven treasury management tools, the integration of artificial intelligence into blockchain networks is accelerating. Yet, with this innovation comes new vulnerabilities that can threaten security, trust, and decentralization.

Understanding the top risks of delegating decisions in Web3 to AI agents is crucial for developers, investors, and protocol designers alike. While AI promises efficiency and scalability, it also raises questions about accountability, transparency, manipulation, and systemic risk. This article explores the major challenges associated with AI-driven decision-making in Web3 and explains why careful design and oversight are essential.

How AI Agents Are Used in Web3

AI agents are being introduced into Web3 to handle tasks that were previously performed by humans. These tasks include market analysis, token swaps, liquidity management, yield optimization, and governance voting. In many cases, AI-driven automation can improve efficiency and reduce emotional or irrational decision-making.

Within decentralized applications, AI systems can monitor blockchain data continuously, identify opportunities, and execute transactions instantly. For example, an AI agent may detect arbitrage opportunities across multiple DeFi protocols and execute trades within seconds. Similarly, AI-powered treasury managers can rebalance assets based on market conditions.
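To make the arbitrage example concrete, the sketch below assumes two hypothetical constant-product pools (the x * y = k design used by Uniswap v2-style exchanges) quoting the same USDC/ETH pair, each with an assumed 0.3% fee. It computes swap output with the constant-product formula and checks whether buying on the cheaper pool and selling on the pricier one is profitable. Reserves and fees are illustrative; a real agent would also have to account for gas and for other transactions landing first.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A hypothetical constant-product (x * y = k) pool for a USDC/ETH pair."""
    reserve_usdc: float
    reserve_eth: float
    fee: float = 0.003  # assumed 0.3% swap fee, charged on the input amount


def amount_out(amount_in: float, reserve_in: float, reserve_out: float, fee: float) -> float:
    """Output of a constant-product swap after deducting the fee from the input."""
    amount_in_after_fee = amount_in * (1 - fee)
    return amount_in_after_fee * reserve_out / (reserve_in + amount_in_after_fee)


def arbitrage_profit(amount_usdc: float, cheap: Pool, rich: Pool) -> float:
    """Buy ETH on `cheap`, sell it on `rich`, and return the USDC profit (or loss)."""
    eth_bought = amount_out(amount_usdc, cheap.reserve_usdc, cheap.reserve_eth, cheap.fee)
    usdc_back = amount_out(eth_bought, rich.reserve_eth, rich.reserve_usdc, rich.fee)
    return usdc_back - amount_usdc


cheap = Pool(reserve_usdc=2_000_000, reserve_eth=1_000)  # about 2,000 USDC per ETH
rich = Pool(reserve_usdc=2_100_000, reserve_eth=1_000)   # about 2,100 USDC per ETH
profit = arbitrage_profit(10_000, cheap, rich)
print(f"profit: {profit:.2f} USDC")  # positive here; gas costs are ignored
```

The same arithmetic that lets an agent spot this opportunity in milliseconds also lets it act on a mispriced pool just as quickly, which is the double edge the rest of this article examines.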

However, this automation also means that decisions are being made by algorithms that may not fully understand the broader implications of their actions. When AI agents control large amounts of capital or governance power, even small errors can create significant consequences.

AI in DAO Governance

Decentralized autonomous organizations are increasingly experimenting with AI-based governance tools. These tools can analyze proposals, recommend voting strategies, and even cast votes automatically on behalf of token holders. While this may increase participation and efficiency, it also introduces new risks related to manipulation and centralization.

If a large number of token holders rely on the same AI system, governance decisions could become concentrated in the hands of a single algorithm. This undermines the principle of decentralization and creates a potential single point of failure.
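One way to quantify this concern is to measure how much voting power is routed through each delegate. The sketch below assumes a hypothetical mapping from token holders to the AI agent (or person) they delegate to, computes each delegate's share of total votes, and derives a Nakamoto-style coefficient: the smallest number of delegates whose combined power exceeds 50%. A value of 1 means a single algorithm can decide any vote on its own. Holder names and balances are invented.

```python
from collections import defaultdict


def delegate_shares(delegations: dict[str, tuple[str, float]]) -> dict[str, float]:
    """Return each delegate's fraction of total voting power.

    `delegations` maps holder -> (delegate, token_balance). A delegate can be
    an AI agent or the holder voting for themselves.
    """
    power: dict[str, float] = defaultdict(float)
    for delegate, balance in delegations.values():
        power[delegate] += balance
    total = sum(power.values())
    return {delegate: p / total for delegate, p in power.items()}


def nakamoto_coefficient(shares: dict[str, float], threshold: float = 0.5) -> int:
    """Smallest number of delegates whose combined share exceeds `threshold`."""
    cumulative = 0.0
    for count, share in enumerate(sorted(shares.values(), reverse=True), start=1):
        cumulative += share
        if cumulative > threshold:
            return count
    return len(shares)


# Hypothetical holders: two of them delegate to the same AI agent.
delegations = {
    "alice": ("agent-x", 400.0),
    "bob": ("agent-x", 250.0),
    "carol": ("carol", 200.0),
    "dave": ("agent-y", 150.0),
}
shares = delegate_shares(delegations)
print(shares["agent-x"])             # 0.65
print(nakamoto_coefficient(shares))  # 1: agent-x alone holds a majority
```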

Smart Contract Vulnerabilities Amplified by AI

Automated Decision Execution

One of the most critical risks of delegating decisions to AI agents lies in the interaction between AI systems and smart contracts. Smart contracts execute automatically once certain conditions are met. When an AI agent triggers these conditions, there is often no human oversight to intervene if something goes wrong.

For example, an AI trading agent might misinterpret market signals and execute a series of high-risk trades. Because smart contracts operate without human intervention, the losses can occur instantly and irreversibly.

In the context of blockchain automation, the speed and autonomy of AI systems can turn small mistakes into large-scale financial losses.
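A common mitigation is a deterministic policy layer between the agent and the chain: the agent proposes actions, but cannot execute anything outside hard limits. The sketch below shows one hypothetical design that caps the size of any single trade, enforces a daily loss budget as a circuit breaker, and routes large trades to a human instead of executing them. The class name, thresholds, and decision labels are assumptions for illustration, not a standard interface.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    NEEDS_HUMAN = "needs_human"
    REJECT = "reject"


@dataclass
class TradeGuard:
    """Hypothetical pre-execution checks applied to every trade an agent proposes."""
    max_trade_usd: float         # hard cap: anything larger is rejected outright
    human_review_usd: float      # trades above this need a human sign-off
    daily_loss_limit_usd: float  # circuit breaker once realized losses reach this
    realized_loss_today: float = 0.0

    def check(self, trade_usd: float) -> Decision:
        """Classify a proposed trade before it is signed and broadcast."""
        if self.realized_loss_today >= self.daily_loss_limit_usd:
            return Decision.REJECT  # circuit breaker has tripped for the day
        if trade_usd > self.max_trade_usd:
            return Decision.REJECT
        if trade_usd > self.human_review_usd:
            return Decision.NEEDS_HUMAN
        return Decision.EXECUTE

    def record_pnl(self, pnl_usd: float) -> None:
        """Accumulate losses only; gains do not extend the loss budget."""
        if pnl_usd < 0:
            self.realized_loss_today += -pnl_usd


guard = TradeGuard(max_trade_usd=50_000, human_review_usd=10_000, daily_loss_limit_usd=5_000)
print(guard.check(2_000).value)   # execute
print(guard.check(20_000).value)  # needs_human
guard.record_pnl(-6_000)
print(guard.check(2_000).value)   # reject: daily loss limit exceeded
```

Keeping this layer small and separate from the model means its behavior can be reviewed and tested independently of whatever reasoning the agent uses.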

Exploitable Logic Flaws

AI agents rely on data inputs and predefined algorithms. If these inputs are manipulated or the algorithms contain flaws, attackers can exploit the system. In a decentralized ecosystem, where transactions are transparent and permanent, such exploits can be devastating.

Attackers may use adversarial techniques to feed misleading data to AI agents, causing them to make harmful decisions. This type of manipulation can lead to drained liquidity pools, mispriced assets, or governance attacks.

Data Manipulation and Oracle Risks

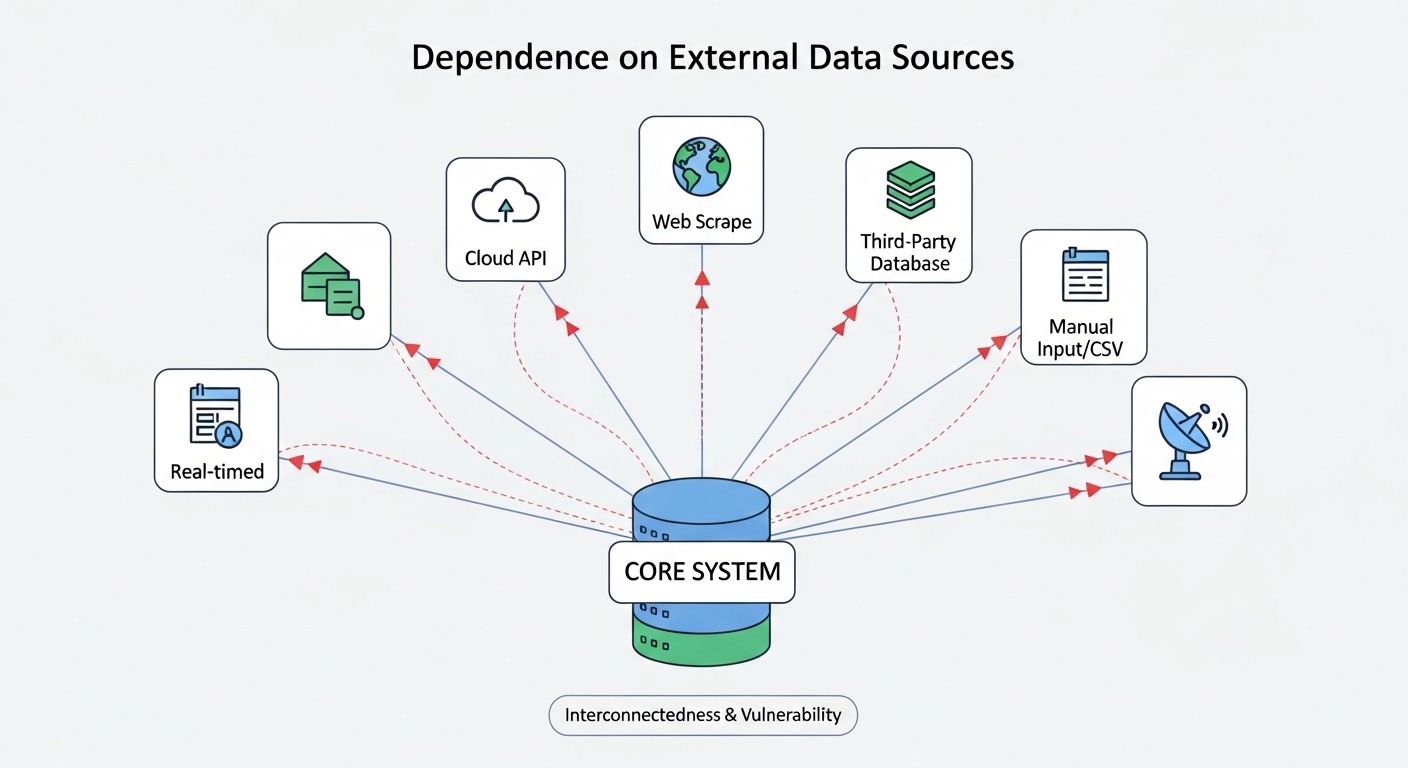

Dependence on External Data Sources

AI agents operating in Web3 often rely on external data sources known as oracles. These oracles provide information about prices, market conditions, and other off-chain data. If the oracle data is inaccurate or manipulated, the AI agent’s decisions will also be flawed.

In traditional systems, human oversight can detect anomalies. However, autonomous AI agents may act instantly on incorrect data, amplifying the impact of misinformation.
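Because an autonomous agent will not pause to question a bad price, one defensive pattern is to require agreement among several independent feeds before acting. The sketch below discards stale readings, takes the median of the rest, drops readings that deviate too far from that median, and returns no price at all when too few sources remain. The `Reading` structure, the 60-second staleness window, the 2% tolerance, and the three-feed minimum are all assumptions; real oracle networks implement their own aggregation.

```python
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    """A hypothetical price reading from one oracle feed."""
    source: str
    price: float
    timestamp: float  # seconds since epoch when the feed last updated


def aggregate_price(
    readings: list[Reading],
    now: float,
    max_age_s: float = 60.0,
    max_deviation: float = 0.02,
    min_feeds: int = 3,
) -> Optional[float]:
    """Return a median price, or None if the feeds are too stale or disagree."""
    fresh = [r.price for r in readings if now - r.timestamp <= max_age_s]
    if len(fresh) < min_feeds:
        return None  # not enough up-to-date sources to trust
    mid = statistics.median(fresh)
    if mid <= 0:
        return None
    # Keep only readings within max_deviation (2% by default) of the median.
    agreeing = [p for p in fresh if abs(p - mid) / mid <= max_deviation]
    if len(agreeing) < min_feeds:
        return None  # sources disagree too much; the agent should not act
    return statistics.median(agreeing)


readings = [
    Reading("feed-a", 2000.0, 990.0),
    Reading("feed-b", 2010.0, 995.0),
    Reading("feed-c", 1990.0, 1000.0),
    Reading("feed-d", 2600.0, 999.0),  # manipulated outlier
]
print(aggregate_price(readings, now=1000.0))  # 2000.0: the 2,600 outlier is discarded
```

Returning `None` rather than a best guess forces the calling agent to handle the "no trustworthy data" case explicitly, which is the situation where a human would normally step in.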

Market Manipulation Through AI Exploits

Sophisticated attackers can manipulate markets in ways that trick AI systems into making poor decisions. For example, an attacker might create artificial price movements to trigger AI-driven trading strategies. Once the AI agents react, the attacker can profit from the resulting volatility. Manipulation of this kind, which exploits the predictable behavior of AI-driven strategies, poses a serious threat to the stability of crypto markets and DeFi protocols.
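Short-lived price spikes are cheap to create but expensive to sustain, which is one reason time-weighted average price (TWAP) oracles are common in DeFi. As a hedged sketch, an agent could compare the current spot price against a TWAP built from recent observations and decline to trade when the two diverge sharply. The `(timestamp, price)` observation format and the 5% threshold are illustrative assumptions.

```python
def twap(observations: list[tuple[float, float]], now: float) -> float:
    """Time-weighted average price from (timestamp, price) pairs sorted by time.

    Each price is weighted by how long it was in effect: until the next
    observation, or until `now` for the most recent one.
    """
    if not observations:
        raise ValueError("need at least one observation")
    weighted_sum = 0.0
    total_time = 0.0
    for i, (ts, price) in enumerate(observations):
        end = observations[i + 1][0] if i + 1 < len(observations) else now
        duration = end - ts
        weighted_sum += price * duration
        total_time += duration
    if total_time <= 0:
        return observations[-1][1]
    return weighted_sum / total_time


def looks_manipulated(
    spot: float, observations: list[tuple[float, float]], now: float, max_gap: float = 0.05
) -> bool:
    """True if spot deviates from the TWAP by more than `max_gap` (5% by default)."""
    reference = twap(observations, now)
    return abs(spot - reference) / reference > max_gap


history = [(0.0, 100.0), (60.0, 101.0), (120.0, 99.0)]  # hypothetical observations
print(twap(history, now=180.0))                  # 100.0
print(looks_manipulated(130.0, history, 180.0))  # True: spot is 30% above the TWAP
```

A TWAP only raises the cost of manipulation; an attacker with enough capital can still move it, so this check complements rather than replaces the other safeguards discussed here.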

Centralization Risks Hidden Behind AI Tools

Concentration of Decision-Making Power

One of the fundamental goals of Web3 is decentralization. However, when many users rely on the same AI agent or decision-making model, power becomes concentrated. If a single AI system influences thousands of wallets or DAO votes, it effectively becomes a central authority. This centralization contradicts the core philosophy of blockchain technology. It also creates systemic risks, as a failure or compromise of the AI system could impact a large portion of the network.

Dependency on Proprietary Algorithms

Many AI agents are developed by private companies and operate using proprietary algorithms. Users often have little visibility into how these systems make decisions. This lack of transparency undermines the principles of trustless systems and open-source protocols.

If the AI provider changes its algorithm or experiences a security breach, users may suffer losses without understanding why.
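Users cannot inspect a proprietary model, but they can at least detect when the thing they approved has changed. A minimal sketch, assuming the provider exposes some model or strategy manifest as bytes (a hypothetical interface), is to pin its SHA-256 digest at approval time and block the agent from acting if the digest later differs. This does not explain the algorithm's behavior; it only makes silent changes visible.

```python
import hashlib


def fingerprint(manifest: bytes) -> str:
    """SHA-256 hex digest of a model or strategy manifest."""
    return hashlib.sha256(manifest).hexdigest()


class PinnedAgent:
    """Wraps a hypothetical agent and blocks actions if its manifest changes."""

    def __init__(self, approved_manifest: bytes):
        self.approved_digest = fingerprint(approved_manifest)

    def may_act(self, current_manifest: bytes) -> bool:
        # Any change to the manifest, however small, changes the digest.
        return fingerprint(current_manifest) == self.approved_digest


agent = PinnedAgent(b'{"model": "v1", "max_leverage": 2}')
print(agent.may_act(b'{"model": "v1", "max_leverage": 2}'))  # True
print(agent.may_act(b'{"model": "v2", "max_leverage": 5}'))  # False: provider changed it
```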

Security Threats and AI Exploitation

AI as a Target for Hackers

AI agents managing digital assets become high-value targets for hackers. If an attacker gains control of an AI system, they can manipulate its decisions and redirect funds.

Unlike human operators, AI agents cannot detect social engineering attacks or suspicious behavior unless they are specifically programmed to do so. This makes them vulnerable to sophisticated exploitation techniques.
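Limiting what a compromised agent can do is often more dependable than trying to make the agent itself unhackable. One hedged pattern is a destination allowlist enforced outside the agent: even if an attacker takes over the agent's decision-making, funds can only move to addresses the owner approved in advance. The sketch below is a hypothetical off-chain version with placeholder addresses; the same idea could also be enforced on-chain, for example through a smart-contract wallet's transaction policy.

```python
class TransferPolicy:
    """Hypothetical allowlist of destinations plus a per-transfer cap."""

    def __init__(self, allowed: set[str], max_amount: float):
        # Hex addresses are case-insensitive, so compare them in lowercase.
        self.allowed = {a.lower() for a in allowed}
        self.max_amount = max_amount

    def approve(self, to_address: str, amount: float) -> bool:
        if to_address.lower() not in self.allowed:
            return False  # unknown destination: possible hijacked agent
        return 0 < amount <= self.max_amount


TREASURY = "0x" + "AB" * 20  # placeholder address, not a real account
ATTACKER = "0x" + "ee" * 20  # placeholder address, not a real account

policy = TransferPolicy({TREASURY}, max_amount=1_000)
print(policy.approve(TREASURY.lower(), 500))  # True
print(policy.approve(ATTACKER, 10))           # False
```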

Adversarial Attacks on AI Models

Adversarial attacks involve manipulating inputs to cause AI systems to make incorrect decisions. In Web3, attackers may craft transactions or data patterns designed to trick AI agents.

For example, a malicious actor might create a sequence of trades that confuses an AI’s pattern recognition model. The AI may interpret the activity as a profitable opportunity and execute a large transaction that benefits the attacker.
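Adversarial sequences like this often depend on the agent reacting to a pattern that exists only briefly. A simple and admittedly partial defense is to require a signal to persist across several consecutive observations (for example, blocks) before acting, which forces an attacker to sustain the deceptive pattern longer and at greater cost. The sketch below assumes a hypothetical boolean signal produced once per block; the three-block window is arbitrary.

```python
from collections import deque


class PersistentSignal:
    """Fires only after a signal has been true for `required` consecutive updates."""

    def __init__(self, required: int = 3):
        self.required = required
        self.recent = deque(maxlen=required)  # keeps only the last `required` values

    def update(self, signal: bool) -> bool:
        self.recent.append(signal)
        return len(self.recent) == self.required and all(self.recent)


trigger = PersistentSignal(required=3)
per_block = [True, True, False, True, True, True]  # hypothetical model outputs
print([trigger.update(s) for s in per_block])
# [False, False, False, False, False, True]
```

The trade-off is latency: the agent also reacts later to genuine opportunities, which is why the window length is a policy choice rather than a fixed rule.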

Accountability and Legal Uncertainty

Who Is Responsible for AI Decisions?

When an AI agent makes a bad decision, determining responsibility becomes complicated. Is the developer accountable? The user who deployed the AI? The DAO that approved its use?

In traditional finance, there are clear lines of accountability. In decentralized governance, responsibility is often distributed across many participants. Introducing autonomous AI agents makes this issue even more complex.
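Tooling cannot settle who is legally responsible, but it can preserve the evidence needed to answer the question. A hedged sketch: record every agent decision, including its inputs and model version, in an append-only log where each entry commits to the hash of the previous one, so that editing any past entry is detectable. The field names are hypothetical; periodically publishing the latest hash on-chain would be one way to make such a log publicly verifiable.

```python
import hashlib
import json


class DecisionLog:
    """Append-only, hash-chained record of agent decisions."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, decision: dict) -> str:
        # sort_keys makes the serialization, and therefore the hash, deterministic.
        body = json.dumps({"prev": prev, "decision": decision}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def append(self, decision: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, decision)
        self.entries.append({"prev": prev, "decision": decision, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; an edited entry breaks the chain from that point on."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["decision"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.append({"action": "swap", "amount_usd": 1000, "model": "v1"})
log.append({"action": "vote", "proposal": 42, "choice": "yes", "model": "v1"})
print(log.verify())  # True
```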

Regulatory Challenges

Regulators are still trying to understand both AI and blockchain technologies. When these two fields intersect, the legal landscape becomes even more uncertain. Governments may impose new rules on AI-driven financial systems, potentially affecting Web3 projects.

This regulatory uncertainty adds another layer of risk for developers and investors.

Loss of Human Judgment in Critical Decisions

Overreliance on Algorithmic Decisions

AI systems are excellent at processing data, but they lack human intuition and ethical reasoning. In complex or unpredictable situations, human judgment can be essential.

Delegating all decisions to AI agents may lead to outcomes that are technically correct but ethically or strategically flawed. For instance, an AI might prioritize short-term profits over long-term sustainability.

Reduced User Awareness

When users rely heavily on AI agents, they may become less aware of how their assets are being managed. This reduced awareness can lead to complacency and poor risk management.

In automated crypto trading environments, users might not notice warning signs until it is too late.
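One way to keep users in the loop is to have the system surface warning signs rather than wait for users to look. The sketch below tracks a portfolio's running peak and flags when the drawdown from that peak crosses a user-chosen threshold. How the alert is delivered (a notification, or pausing the agent) is left open, and the 10% default is an arbitrary assumption.

```python
from typing import Optional


class DrawdownMonitor:
    """Flags when portfolio value falls a given fraction below its running peak."""

    def __init__(self, alert_at: float = 0.10):
        self.alert_at = alert_at
        self.peak = 0.0

    def update(self, value: float) -> Optional[float]:
        """Return the current drawdown if it breaches the threshold, else None."""
        self.peak = max(self.peak, value)
        drawdown = (self.peak - value) / self.peak if self.peak > 0 else 0.0
        return drawdown if drawdown >= self.alert_at else None


monitor = DrawdownMonitor(alert_at=0.10)
for value in [100, 120, 115, 107, 125, 100]:  # hypothetical portfolio values
    alert = monitor.update(value)
    if alert is not None:
        print(f"value {value}: down {alert:.1%} from peak {monitor.peak}")
```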

Systemic Risks and Network-Wide Failures

Cascading Effects of AI Errors

If multiple AI agents use similar strategies, a single market event could trigger simultaneous actions across the network. This can lead to cascading failures, where one event causes a chain reaction of losses.

Such scenarios are similar to flash crashes in traditional markets, but the speed of blockchain transactions could make the impact even more severe.

Homogenization of Strategies

When many AI agents follow similar models, market diversity decreases. This homogenization increases systemic risk because the entire ecosystem may respond to events in the same way.

In a decentralized environment, diversity of strategies is essential for stability. Overreliance on AI could undermine this balance.
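The cascading-failure and homogenization points can be illustrated together with a toy simulation. The sketch below assumes each agent sells once the price drops below its own stop-loss threshold, and that every forced sale pushes the price down by a fixed fraction, a crude stand-in for market impact. With identical thresholds, a 5% shock triggers every agent; with thresholds spread out, the same shock triggers one sale and the cascade stops. All parameters are invented and do not model any real market.

```python
def simulate_cascade(
    thresholds: list[float], start_price: float, shock: float, impact_per_sale: float
) -> tuple[float, int]:
    """Return (final_price, number_of_agents_that_sold) after an initial shock."""
    price = start_price * (1 - shock)
    sold = [False] * len(thresholds)
    changed = True
    while changed:  # keep sweeping until no further agent is triggered
        changed = False
        for i, threshold in enumerate(thresholds):
            if not sold[i] and price < threshold:
                sold[i] = True
                price *= 1 - impact_per_sale  # each forced sale moves the price down
                changed = True
    return price, sum(sold)


homogeneous = [96.0] * 10                        # every agent uses the same stop-loss
diverse = [96.0 - 4 * i for i in range(10)]      # stop-losses from 96 down to 60

print(simulate_cascade(homogeneous, 100.0, 0.05, 0.01))  # all 10 agents sell
print(simulate_cascade(diverse, 100.0, 0.05, 0.01))      # only 1 agent sells
```

If the impact per sale were larger than the gap between neighboring thresholds, the diverse population would cascade too; diversity reduces systemic risk but does not eliminate it.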

Ethical and Governance Concerns

Bias in AI Decision-Making

AI systems are trained on historical data. If that data contains biases, the AI may replicate those biases in its decisions. In Web3 governance, this could lead to unfair outcomes or the marginalization of certain participants.

For example, an AI voting agent might consistently favor proposals that benefit large token holders, reinforcing existing power imbalances.
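Claims like this can be checked empirically. As a hedged sketch, a DAO could audit an AI voting agent by comparing each of its past votes with two different majorities on the same proposal: the token-weighted outcome and the one-address-one-vote (headcount) outcome. An agent that agrees far more often with the token-weighted side may be systematically favoring large holders. The record format and data below are hypothetical, and an agreement rate alone does not prove bias; it only indicates where to look more closely.

```python
from dataclasses import dataclass


@dataclass
class ProposalRecord:
    """Hypothetical record of one proposal's votes."""
    agent_vote: bool                 # True = the AI agent voted "yes"
    votes: list[tuple[bool, float]]  # (voted_yes, token_weight) for each other voter


def majorities(votes: list[tuple[bool, float]]) -> tuple[bool, bool]:
    """Return (token_weighted_yes, headcount_yes); ties count as 'no'."""
    yes_weight = sum(w for yes, w in votes if yes)
    no_weight = sum(w for yes, w in votes if not yes)
    yes_heads = sum(1 for yes, _ in votes if yes)
    return yes_weight > no_weight, yes_heads > len(votes) - yes_heads


def agreement_rates(records: list[ProposalRecord]) -> tuple[float, float]:
    """Fraction of proposals where the agent matched each kind of majority."""
    if not records:
        raise ValueError("no proposals to audit")
    token_agree = head_agree = 0
    for r in records:
        token_majority, head_majority = majorities(r.votes)
        token_agree += r.agent_vote == token_majority
        head_agree += r.agent_vote == head_majority
    return token_agree / len(records), head_agree / len(records)


small = [(False, 10.0)] * 3  # three small holders voting "no"
records = [
    ProposalRecord(True, [(True, 1000.0)] + small),                  # whale yes, crowd no
    ProposalRecord(False, [(False, 1000.0)] + [(True, 10.0)] * 3),   # whale no, crowd yes
    ProposalRecord(True, [(True, 1000.0)] + [(True, 10.0)] * 3),     # everyone agrees
]
print(agreement_rates(records))  # agent always sides with the token-weighted majority
```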

Loss of Community Control

Web3 was built on the idea of community-driven governance. Delegating decisions to AI agents risks shifting control away from human participants.

If AI systems dominate decision-making processes, the role of the community may diminish, weakening the social foundations of decentralized networks.

Conclusion

The integration of artificial intelligence into Web3 represents one of the most significant technological shifts in the blockchain space. AI agents offer powerful capabilities, from automated trading to governance participation. They promise efficiency, speed, and data-driven decision-making that could transform decentralized ecosystems.

However, the top risks of delegating decisions in Web3 to AI agents are substantial. From smart contract vulnerabilities and data manipulation to centralization threats and legal uncertainty, these risks highlight the need for caution. Autonomous AI systems can amplify errors, create new attack surfaces, and undermine the principles of decentralization if not carefully designed and monitored.

The future of Web3 will likely involve a hybrid approach, where AI agents assist human participants rather than replace them entirely. Transparency, accountability, and robust security measures will be essential to ensure that AI enhances rather than harms decentralized ecosystems. As the technology continues to evolve, developers, regulators, and users must work together to create frameworks that balance innovation with responsibility.

FAQs

Q: Why are AI agents being used in Web3 decision-making?

AI agents are being used in Web3 because they can process large amounts of blockchain data quickly and make automated decisions. They are particularly useful in areas such as trading, liquidity management, and governance participation. By removing human emotion and delay, AI systems can respond instantly to market changes. However, this efficiency also comes with risks, as the AI may act on incorrect data or flawed logic without human oversight.

Q: Can AI agents be hacked in decentralized systems?

Yes, AI agents can become targets for hackers, especially if they control valuable assets or governance rights. Attackers may attempt to manipulate the data inputs of the AI, exploit vulnerabilities in its algorithms, or gain direct access to its control mechanisms. Because AI agents operate autonomously, successful attacks can cause rapid and irreversible losses.

Q: How does AI centralize power in a decentralized ecosystem?

AI can centralize power when many users rely on the same decision-making models or tools. If a single AI system influences thousands of wallets or votes, it effectively becomes a central authority. This undermines the core principle of decentralization and creates a single point of failure that could impact the entire network.

Q: What role do oracles play in AI-driven Web3 systems?

Oracles provide external data that AI agents use to make decisions. This data may include asset prices, market conditions, or real-world information. If oracle data is manipulated or inaccurate, the AI agent will act on false information. This makes secure and reliable oracles essential for safe AI-driven decision-making in Web3.

Q: Is it safe to fully delegate financial decisions to AI agents?

Fully delegating financial decisions to AI agents carries significant risks. While AI can improve efficiency and speed, it lacks human intuition and ethical judgment. Errors, data manipulation, or algorithmic flaws can lead to serious financial losses. A balanced approach that combines AI assistance with human oversight is generally considered safer.